Open Source AI Gateway

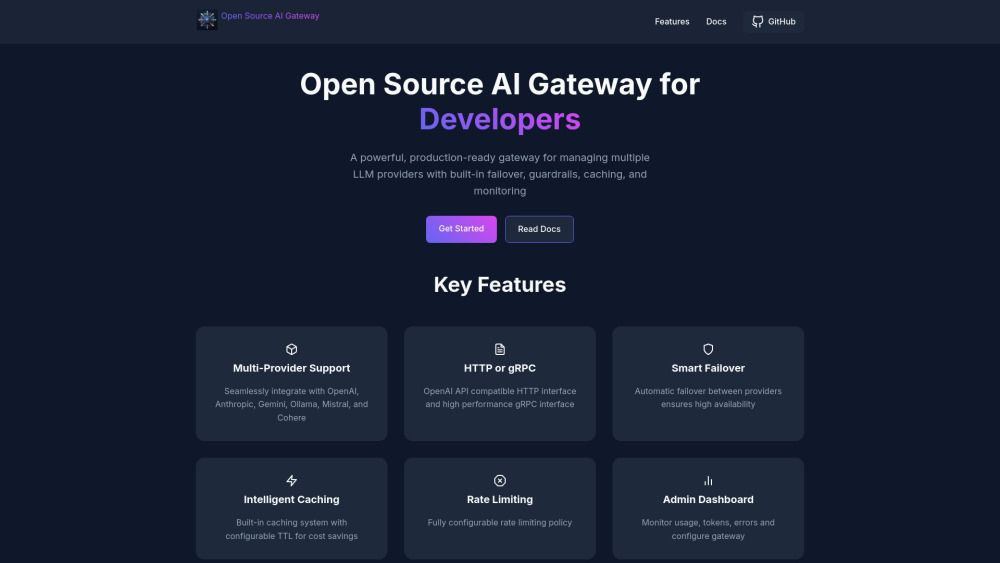

Open Source AI Gateway for Developers

What is Open Source AI Gateway?

The Open Source AI Gateway is a production-ready gateway that lets developers manage multiple large language model (LLM) providers from one place. It has built-in failover, guardrails, caching, and monitoring, which makes it a good fit for applications that need high availability and performance.

Key features of the Open Source AI Gateway include:

Multi-Provider Support: Integrate with LLM providers including OpenAI, Anthropic, Gemini, Ollama, Mistral, and Cohere.

HTTP or gRPC: Exposes an OpenAI-compatible HTTP interface as well as a high-performance gRPC interface.

Smart Failover: Automatic failover between providers ensures uninterrupted service.

Intelligent Caching: Built-in caching system with configurable time-to-live (TTL) for cost savings.

Rate Limiting: Fully configurable rate limiting policy to manage usage effectively.

Admin Dashboard: Monitor usage, tokens, and errors, and configure the gateway.

Content Guardrails: Configurable content filtering and safety measures to ensure compliance.

Enterprise Logging: Integration with tools like Splunk, Datadog, and Elasticsearch for comprehensive logging.

System Prompt Injection: Ability to intercept and inject system prompts for all outgoing requests.
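To make the failover idea concrete, here is a minimal shell sketch of ordered fallback as a client would see it: a request is tried against each provider in priority order until one succeeds. It is an illustration only, not the gateway's own implementation, which performs failover on the server side; the function name with_failover and the provider order are hypothetical.

```shell
# Hypothetical helper illustrating ordered provider failover (not part of the gateway).
# Usage: with_failover REQUEST_FN PROVIDER...
#   REQUEST_FN is any command that takes a provider name and
#   exits non-zero when the request to that provider fails.
with_failover() {
  local request_fn=$1
  shift
  local provider
  for provider in "$@"; do
    if "$request_fn" "$provider"; then
      return 0   # the first provider that succeeds serves the request
    fi
    echo "provider '$provider' failed, trying the next one" >&2
  done
  echo "all providers failed" >&2
  return 1
}
```

For example, with_failover my_request openai anthropic gemini would try OpenAI first, then fall back to Anthropic and finally Gemini, where my_request stands for a hypothetical function of your own that sends the request for the given provider.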

Why Open Source AI Gateway?

The Open Source AI Gateway gives developers a single place to manage multiple large language model (LLM) providers. Its production-ready architecture keeps applications available and responsive through smart failover and intelligent caching, and it integrates with providers such as OpenAI and Anthropic, so it suits a wide range of AI applications.

Utilizing the Open Source AI Gateway provides several advantages:

Multi-provider support for flexibility in choosing LLMs.

A choice between an OpenAI-compatible HTTP interface and a high-performance gRPC interface.

Automatic failover to maintain service continuity.

Cost savings through intelligent caching mechanisms.

Configurable rate limiting to manage API usage effectively.

Comprehensive monitoring through an admin dashboard.

How to Use Open Source AI Gateway

To get started with the Open Source AI Gateway, first configure your API settings: create a Config.toml file containing your API configuration, including your API key, model, and endpoint.
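A minimal sketch of writing such a file from the shell follows. The table name openAIConfig and the keys apiKey, model, and endpoint are assumptions for illustration rather than the gateway's confirmed schema, and the key value is a placeholder to replace with your own; consult the project's documentation for the exact settings it expects.

```shell
# Create Config.toml in the current directory; the docker run command in the
# next step mounts it from there via $(pwd).
# ASSUMPTION: table and key names are illustrative, not a confirmed schema.
cat > Config.toml <<'EOF'
[openAIConfig]
apiKey = "sk-REPLACE_WITH_YOUR_KEY"
model = "gpt-4o-mini"
endpoint = "https://api.openai.com"
EOF
```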

Next, run the gateway using Docker. Use the following command to start the container with your configuration mounted:

docker run -p 8080:8080 -p 8081:8081 -p 8082:8082 -v "$(pwd)/Config.toml:/home/ballerina/Config.toml" chintana/ai-gateway:v1.2.0

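Once the gateway is running, you can start making API requests. Use the following curl command to send a chat completion request through the gateway; the x-llm-provider header tells the gateway which provider should handle it: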

curl -X POST http://localhost:8080/v1/chat/completions -H "Content-Type: application/json" -H "x-llm-provider: openai" -d '{"messages": [{"role": "user","content": "When will we have AGI? In 10 words"}]}'
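If you call the gateway from scripts, the request above can be wrapped in a small function. This is a hedged sketch: the names json_escape, chat_payload, and ask and the GATEWAY_URL variable are hypothetical, the escaping covers only backslashes and double quotes (not newlines or other control characters), and provider names other than openai are assumptions that depend on which providers you have configured.

```shell
# Hypothetical helpers for sending one user message through the gateway.

# Escape backslashes first, then double quotes, so the text is safe
# inside a JSON string (newlines and control characters are NOT handled).
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build the same request body as the curl example, for an arbitrary prompt.
chat_payload() {
  printf '{"messages": [{"role": "user", "content": "%s"}]}' "$(json_escape "$1")"
}

# Usage: ask PROVIDER PROMPT
# The x-llm-provider header selects the provider; GATEWAY_URL defaults to
# the local HTTP port published by the docker run command.
ask() {
  curl -sS -X POST "${GATEWAY_URL:-http://localhost:8080}/v1/chat/completions" \
    -H "Content-Type: application/json" \
    -H "x-llm-provider: $1" \
    -d "$(chat_payload "$2")"
}
```

For example, ask openai "When will we have AGI? In 10 words" sends the same request as the curl command above.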

Introduction:

Open Source AI Gateway is a production-ready solution that helps developers manage multiple large language model (LLM) providers efficiently. Its key benefits include integration with major providers such as OpenAI and Anthropic, automatic failover for high availability, and intelligent caching for cost savings. The platform also offers an admin dashboard for monitoring usage and configuring the gateway.

Added on:

Mar 26 2025

Company:

AI Gateway

Features:

Multi-Provider Support, HTTP or gRPC, Smart Failover