Deepseek R1


What is Deepseek R1?

DeepSeek R1 is a reasoning-focused AI model built on a mixture-of-experts (MoE) architecture: of its 671 billion total parameters, only 37 billion are active for any given token. It supports a context length of 128,000 tokens and is trained largely through reinforcement learning, relying far less on supervised fine-tuning than conventional training pipelines. Because only a fraction of the parameters run per token, DeepSeek R1 delivers strong reasoning capabilities while remaining efficient.

One of the standout features of DeepSeek R1 is its cost-effectiveness: it is priced roughly 90-95% lower than comparable models, such as OpenAI's offerings, while reporting similar benchmark performance. DeepSeek R1 also supports local deployment through smaller distilled models, which makes it usable in resource-constrained environments.

MoE system with 37B active parameters and 671B total parameters

128K context support, with reasoning optimized through reinforcement learning

Cost-effective pricing compared to competitors

Local deployment capabilities with distilled models

State-of-the-art performance in various benchmarks

Deepseek R1 Features

DeepSeek R1 is built on an MoE (Mixture of Experts) architecture with 671 billion total parameters, of which 37 billion are active per token, and it supports a context length of 128K. Reinforcement learning is used to strengthen its reasoning, enabling self-verification, multi-step reflection, and human-aligned reasoning.
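To make the "active versus total parameters" idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a generic illustration of the MoE technique, not DeepSeek's actual router: the function names, the choice of k=2, and the toy experts are all assumptions made for the example.

```python
import math

def top_k_route(gate_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    # Pick the k experts with the largest gate scores.
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    # Softmax over the selected scores only, so the weights sum to 1.
    peak = max(gate_logits[i] for i in top)
    exps = [math.exp(gate_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, experts, gate_logits, k=2):
    """Combine the outputs of the k routed experts; the others never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))

# Share of parameters active per token, from the figures quoted above.
ACTIVE_FRACTION = 37e9 / 671e9  # about 5.5%
```

Only the selected experts are evaluated for each input, which is why a model can hold 671B parameters while spending compute on roughly 37B of them per token.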

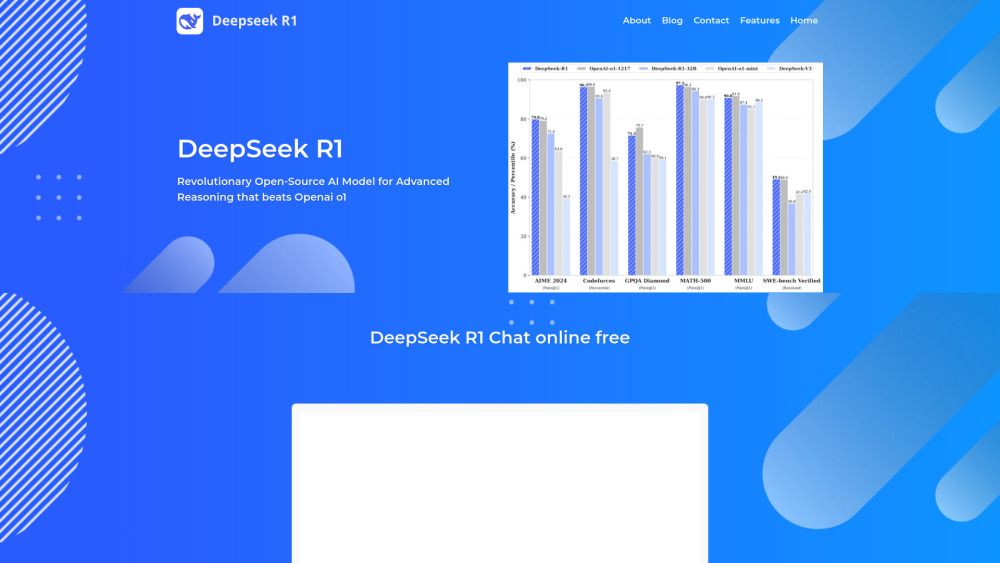

DeepSeek R1 has posted strong results across several benchmarks: 97.3% accuracy on MATH-500, a rating in the 96.3rd percentile on Codeforces, and a 79.8% pass rate on AIME 2024. These results place it among the top-performing AI models. DeepSeek R1 also offers flexible deployment options, including an OpenAI-compatible API endpoint and openly available weights, with distilled variants ranging from 1.5B to 70B parameters that can be used commercially.
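Because the endpoint is described as OpenAI-compatible, a request should follow the familiar chat-completions shape. The sketch below only builds the HTTP request using the standard library; the base URL, the `/chat/completions` path, and the model name `deepseek-reasoner` are assumptions not stated in this listing, so check the provider's API documentation before relying on them.

```python
import json
import urllib.request

# Assumed values for illustration; confirm against the official API docs.
BASE_URL = "https://api.deepseek.com"
MODEL = "deepseek-reasoner"

def build_chat_request(prompt, api_key, base_url=BASE_URL, model=MODEL):
    """Build (but do not send) an OpenAI-style chat-completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it; in an OpenAI-style response the reply text is normally found under `choices[0].message.content`.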

MoE architecture with 37B active parameters and 671B total parameters

128K context length support

Advanced reinforcement learning for enhanced reasoning capabilities

High performance on benchmarks: 97.3% on MATH-500, 96.3rd percentile on Codeforces, 79.8% on AIME 2024

OpenAI-compatible API endpoint, priced from $0.14 per million tokens

Open source with MIT-licensed weights and multiple distilled model variants

Why Deepseek R1?

DeepSeek R1 is built on a Mixture of Experts (MoE) architecture with 37 billion active parameters out of 671 billion total, and it supports a 128K context length. The model is optimized through large-scale reinforcement learning, which enables capabilities such as self-verification and multi-step reflection. These features let DeepSeek R1 work through complex problems with visible chain-of-thought reasoning.

A key advantage of DeepSeek R1 is its cost-effectiveness: it is priced roughly 90-95% below competitors such as OpenAI's models while offering comparable reasoning capabilities. It also supports local deployment and offers a range of distilled models suited to resource-constrained environments. On benchmarks, the model reaches 97.3% accuracy on MATH-500 and a 79.8% pass rate on AIME 2024, placing it among the top-performing AI models.

Cost-effective pricing, from $0.14 per million tokens

Supports local deployment with various distilled models

State-of-the-art performance in multiple benchmarks

Open-source with full model weights available for commercial use
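For local deployment, a quick back-of-the-envelope check is whether a distilled model's weights fit in memory. The sketch below multiplies parameter count by bytes per parameter; it covers weights only and ignores activations and cache overhead. The function name and the precision choices are illustrative assumptions, while the 1.5B and 70B sizes come from the distilled range mentioned above.

```python
def weight_memory_gib(num_params, bytes_per_param):
    """Approximate memory needed for model weights alone, in GiB."""
    return num_params * bytes_per_param / 2**30

# Smallest and largest distilled sizes at 16-bit (2 bytes) and 4-bit (0.5 bytes).
for name, params in [("1.5B", 1.5e9), ("70B", 70e9)]:
    fp16 = weight_memory_gib(params, 2)
    int4 = weight_memory_gib(params, 0.5)
    print(f"{name}: ~{fp16:.1f} GiB at 16-bit, ~{int4:.1f} GiB at 4-bit")
```

Actual requirements depend on the runtime, quantization format, and context length in use, so treat these figures as a lower bound.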


Key Features

Chat online free

Award-winning software

Community support

AI-powered coding

Deep research capabilities

How to Use

Visit the Website

Navigate to the tool's official website


Choose Your Plan

Input Tokens (Cache Hit)

- Cost-effective for repeated queries

- Intelligent caching system

- Significantly lower than competitors

Input Tokens (Cache Miss)

- Higher cost for non-cached queries

- Still competitive pricing

- Suitable for varied usage scenarios

Output Tokens

- Affordable output processing

- Cost-effective for high output needs

- Competitive with industry standards
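The three pricing tiers above combine into a single bill, which can be sketched as a small calculator. Only the $0.14 per million figure appears in this listing, and it is assumed here to be the cache-hit input rate; the cache-miss and output rates below are placeholder assumptions for illustration and should be replaced with the provider's current prices.

```python
# USD per million tokens. Only 0.14 is quoted in this listing; the other
# two rates are placeholder assumptions, not confirmed prices.
EXAMPLE_RATES = {"cache_hit": 0.14, "cache_miss": 0.55, "output": 2.19}

def estimate_cost_usd(cache_hit_tokens, cache_miss_tokens, output_tokens,
                      rates=EXAMPLE_RATES):
    """Estimate the cost of a workload split across the three token tiers."""
    per_million = 1_000_000
    total = (cache_hit_tokens * rates["cache_hit"]
             + cache_miss_tokens * rates["cache_miss"]
             + output_tokens * rates["output"])
    return total / per_million
```

For example, `estimate_cost_usd(2_000_000, 1_000_000, 500_000)` prices a workload with two million cached input tokens, one million uncached input tokens, and half a million output tokens under the assumed rates.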


Introduction:

Deepseek R1 is an advanced AI model designed for efficient reasoning and high-performance tasks, utilizing a unique mixture of experts (MoE) architecture with 37 billion active parameters. It offers significant cost savings, operating at 90-95% less than competitors while delivering comparable reasoning capabilities. Additionally, Deepseek R1 supports local deployment and provides various distilled models to cater to different resource environments, making it a versatile choice for developers an

Added on:

Jan 21 2025

Company:

DeepSeek

Monthly Visitors:

105,006+

Features:

Chat online free, Award-winning software, Community support

Pricing Model:

Input Tokens (Cache Hit), Input Tokens (Cache Miss), Output Tokens